In the realm of artificial intelligence, Microsoft’s unveiling of the Mu language model marks a significant step forward. With the recent rollout of Windows 11 features, the tech behemoth has embedded Mu directly into the operating system’s settings, allowing users to describe what they want in natural language and letting the AI handle the nitty-gritty. While many tech companies flirt with AI capabilities, Microsoft has moved early with Mu, demonstrating that it’s not merely about innovation but about executing it with precision.

This innovation moves machine learning from the theoretical into the practical, enabling smoother interaction between users and machines. Mu is specifically engineered to work on-device, showcasing a promising shift in technology towards localized processing. Not only does this promise enhanced speed and performance, but it also raises pertinent questions about data security and user privacy. In an age where cloud computing dominates, Mu’s ability to function entirely on compatible Copilot+ PCs via the onboard neural processing unit (NPU) invites scrutiny but also instills confidence. On-device processing reduces how much data has to leave the machine, which speaks to a key concern in our increasingly monitored world.

The Technical Marvel of Mu

Mu is built on a transformer-based encoder-decoder architecture with 330 million parameters. This compact design democratizes AI, making it a palatable solution even for devices that aren’t powerhouses in computing. Yet Microsoft insists that this model isn’t just about being smaller but about being smarter. The company claims Mu delivers results comparable to those of larger models like Phi-3.5-mini, even though it is a fraction of the size. That claim leaves an open question: will smaller equal smarter in the long run, or are we merely trading power for convenience and computational efficiency?
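To make the size argument concrete, here is a rough back-of-the-envelope sketch of how much memory the weights of a model this size would occupy at different numeric precisions. The bit widths below are illustrative assumptions, not a statement of how Mu is actually stored, and the figures cover weights only, not activations or runtime overhead.

```python
# Weight-only memory estimate for a model of the size quoted in the text.
# The precisions listed are assumptions chosen for illustration.
PARAMS = 330_000_000  # parameter count quoted in the text

def weight_bytes(n_params, bits_per_param):
    """Bytes needed to store n_params weights at the given bit width."""
    return n_params * bits_per_param // 8

def footprint_table(n_params, bit_widths=(32, 16, 8, 4)):
    """Map each bit width to the weight footprint in decimal gigabytes."""
    return {bits: weight_bytes(n_params, bits) / 1e9 for bits in bit_widths}

for bits, gb in footprint_table(PARAMS).items():
    print(f"{bits:>2}-bit weights: {gb:.3f} GB")
```

Under these assumptions the weights range from about 1.3 GB at 32 bits per parameter to well under 0.2 GB at 4 bits, which is the kind of gap that decides whether a model fits comfortably on a consumer device.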

Moreover, the optimization techniques employed, ranging from task-specific fine-tuning to low-rank adaptation (LoRA) methods, give Mu the support it needs to perform well on constrained hardware. Compressing the model into something manageable leads to faster response times, a significant advantage in the fast-paced tech world. The polished surface of Mu reveals glimmers of potential but also a clear need for continual refinement as its role within our digital ecosystem grows.
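For readers unfamiliar with low-rank adaptation, the idea can be sketched in a few lines. This is a generic illustration of the technique, not Microsoft’s implementation: the class name `LoRALinear`, the layer sizes, the rank, and the initialization values are all hypothetical. A frozen weight matrix W is left untouched, and only two small matrices A and B are trained, so the layer computes W·x + (alpha / r)·B·(A·x).

```python
import random

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, d_in, d_out, r, alpha=1.0, seed=0):
        rng = random.Random(seed)
        # Frozen "pretrained" weight (random here, purely for illustration).
        self.W = [[rng.gauss(0, 0.02) for _ in range(d_in)] for _ in range(d_out)]
        # Trainable factors: A starts small and random, B starts at zero,
        # so the adapted layer initially matches the frozen layer exactly.
        self.A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]
        self.scale = alpha / r

    def forward(self, x):
        base = matvec(self.W, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])

    def frozen_params(self):
        return len(self.W) * len(self.W[0])

layer = LoRALinear(d_in=64, d_out=64, r=4)
print("frozen:", layer.frozen_params(), "trainable:", layer.trainable_params())
```

In this toy layer only 512 of the values are trainable against 4,096 frozen ones, which hints at why the approach suits task-specific tuning of a small on-device model.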

Navigating the User Experience with AI

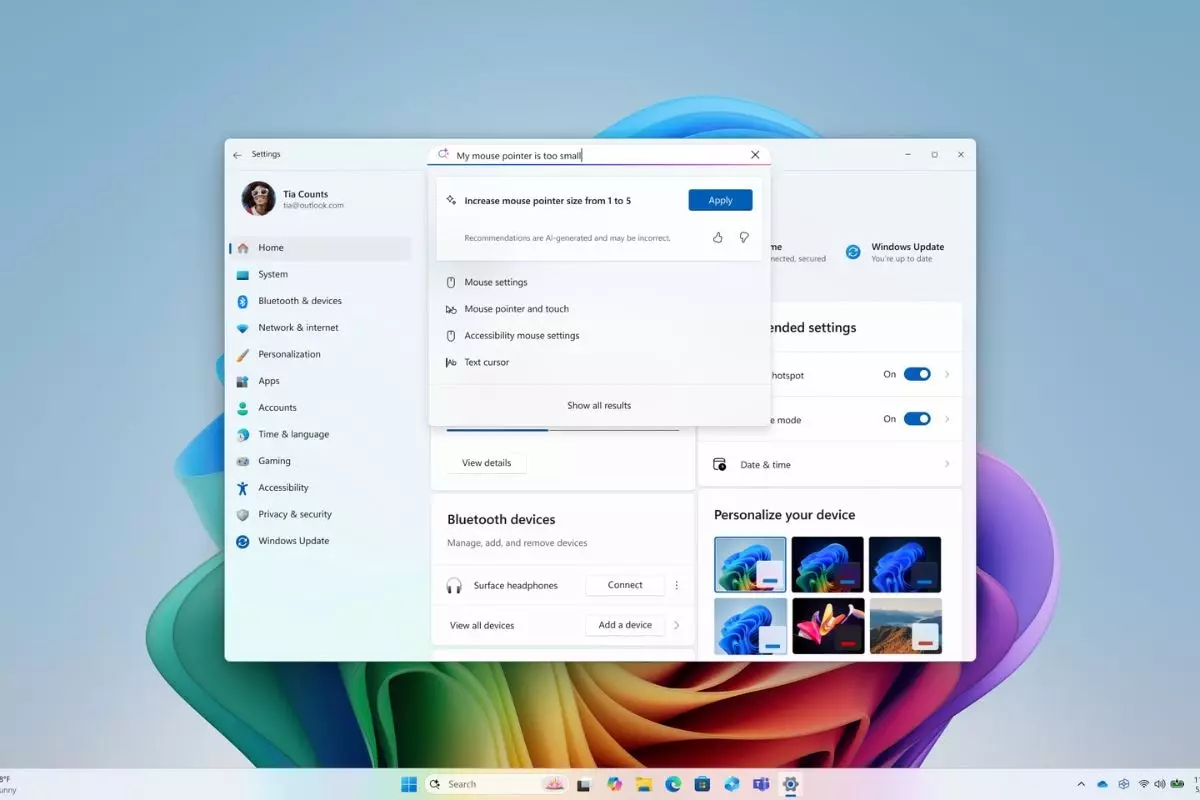

Mu’s performance brings forth a salient truth: user experience (UX) cannot be an afterthought in the deployment of AI models. Users often express frustration when vague queries yield insufficient results. Perhaps the shift in focus toward multi-word input phrases comes at the expense of a seamless experience. Are users willing to adjust their inquiry style, or will they resist and revert to traditional methods when faced with ambiguity? Microsoft’s quick acknowledgment of the gap between keyword-based responses and user intent signals a readiness to engage in a complex dialogue with its audience. Staying relevant means meeting users’ needs through intuitive interaction, and that work is never finished.

By scaling up its training data from 50 settings to hundreds, Microsoft has shown a commitment to curtailing the frustrations associated with conventional operating systems, making strides in ease of use and accessibility. Yet this raises another potential pitfall. Effective training of an AI depends heavily on the contextual richness of its data. The model caters primarily to frequently used settings, which raises the question of whether this represents a true understanding of user needs or merely a simplistic approach to problem-solving.

Finding a Balance in Efficiency and Control

As the tech landscape races forward, one cannot ignore the looming specter of control—who wields it and how it is exercised. With Microsoft’s Mu, while efficiency enhances user capability, we must remain vigilant about the ramifications of such advancements. The AI’s ability to automate without oversight or complete understanding could lead to scenarios where critical human involvement is diluted. This raises a pertinent question: how do we trust an entity that learns from our actions but remains bound by invisible algorithms?

In the effort to humanize technology, academics and tech innovators alike must be cautious about leaning too heavily on AI tools like Mu. As our technical capacities strengthen, so too does the responsibility to remain critical of the tools we embrace. In this pivotal moment, Mu could not only redefine how we interact with technology but also challenge the fabric of the relationship between humans and machines, highlighting the thin, sometimes frail line that separates assistance from dependence.
